A grey box loads. And suddenly you’re asked to prove yourself. The web feels different again.

Across major UK news sites, more readers now see prompts urging them to “verify” their humanity. One prominent publisher, News Group Newspapers, warns that automated access and text or data mining fall outside its terms, and directs businesses to seek permission. Genuine users sometimes get swept up in the dragnet, which raises two pressing questions: why is this happening, and what should you do when it happens to you?

What sits behind the sudden checks

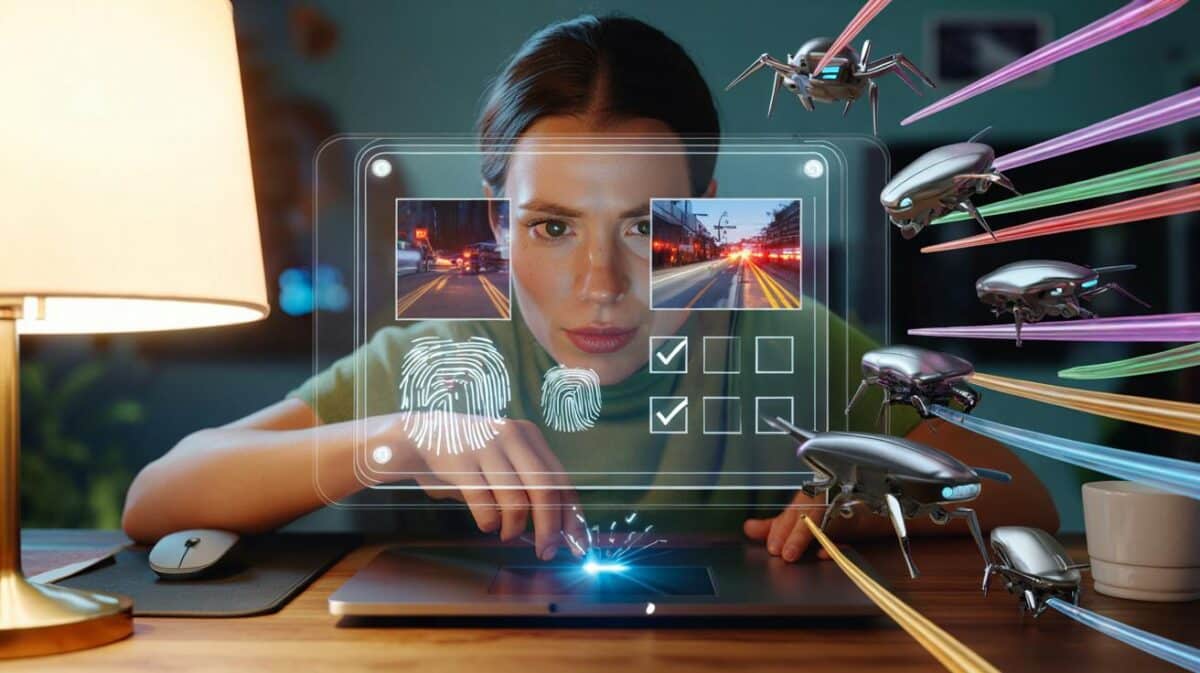

Publishers are tightening defences because automated tools have grown more sophisticated and more common. Behavioural signals once used to spot bots now sometimes flag genuine readers who scroll quickly, open multiple tabs or use a VPN. When a system interprets erratic clicks or rapid page requests as robot-like, it triggers challenges and, in some cases, blocks.

Automated collection for training AI or large language models sits outside permitted use on many news sites and can trigger blocks or legal notices.

The technology stack behind these prompts mixes device fingerprinting, rate limits, IP reputation and anomaly detection. If your browsing deviates from what these models expect of a typical human, the system may pause the session until you complete a test, wait, or contact support. News Group Newspapers explicitly directs legitimate users who have been misidentified to its support channel at [email protected].
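To make the idea of mixed signals concrete, here is a minimal Python sketch of one way a site might fold several hints into a single risk score and then allow, challenge or block a session. Everything in it is an assumption for illustration. The `SessionSignals` fields, the weights and the thresholds are hypothetical, and real systems typically use many more signals and trained models rather than hand-set numbers.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    CHALLENGE = "challenge"
    BLOCK = "block"


@dataclass
class SessionSignals:
    # Hypothetical fields; real detection systems use far more signals.
    requests_per_minute: float  # observed request rate for this session
    ip_reputation: float        # 0.0 (poor) to 1.0 (clean), e.g. from a reputation feed
    headless_browser: bool      # device/fingerprint hint
    known_datacentre_ip: bool   # VPN and hosting ranges often score worse


def risk_score(s: SessionSignals) -> float:
    """Combine signals into a 0..1 risk score using illustrative, made-up weights."""
    score = 0.0
    # Rate signal saturates at 60 requests per minute (hypothetical cap).
    score += 0.4 * min(s.requests_per_minute / 60.0, 1.0)
    # A poorer IP reputation adds more risk.
    score += 0.3 * (1.0 - s.ip_reputation)
    score += 0.2 if s.headless_browser else 0.0
    score += 0.1 if s.known_datacentre_ip else 0.0
    return score


def decide(s: SessionSignals, challenge_at: float = 0.5, block_at: float = 0.8) -> Decision:
    """Map the score onto the three outcomes readers actually see."""
    score = risk_score(s)
    if score >= block_at:
        return Decision.BLOCK
    if score >= challenge_at:
        return Decision.CHALLENGE
    return Decision.ALLOW


if __name__ == "__main__":
    home_reader = SessionSignals(6, 0.9, False, False)
    vpn_reader = SessionSignals(45, 0.4, False, True)
    scraper = SessionSignals(300, 0.1, True, True)
    for name, s in [("home", home_reader), ("vpn", vpn_reader), ("scraper", scraper)]:
        print(name, decide(s).value)
```

With these made-up weights, a reader on a clean home connection scores close to zero. The same person on a shared VPN address, opening pages quickly, crosses the challenge threshold without being blocked. That is roughly the false-positive pattern described above.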

Why publishers are drawing a line

Three pressures drive this shift. First, bot traffic can distort audience metrics and hurt advertising yields. Second, automated scraping can copy entire catalogues at scale, undermining paid products and rights. Third, AI developers are hungry for fresh text to train models, and publishers are responding with stricter terms, technical blocks, or licensing gates.

In practical terms, this means restrictions on scraping and text or data mining without permission, especially for commercial use. News Group Newspapers states that commercial users should request clearance via [email protected]. That stance mirrors a wider trend: rights holders want to control how, and by whom, their content is harvested and reused.

How you get caught in the net

False positives stem from certain browsing habits and tools. Quick bursts of activity can resemble scripts. Plugins can tamper with the page. Privacy tools can obscure signals that systems rely on to differentiate people from code.

- Heavy tabbing: opening many articles in seconds can look like a crawler.

- VPN or corporate network: shared or overseas IPs may carry poor reputations.

- Aggressive refresh: hammering reload after a paywall or error can trip rate limits.

- Automation helpers: extensions that prefetch pages or summarise text can trigger blocks.

- Unusual devices: headless or embedded browsers can fail human-behaviour checks.
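A sliding-window counter is one common way to spot bursts, and it shows why the “heavy tabbing” and “aggressive refresh” patterns above stand out. The sketch below assumes a hypothetical limit of 10 page requests in any 5-second window. Publishers do not disclose their real thresholds, and the `BurstDetector` name is invented for illustration.

```python
from collections import deque


class BurstDetector:
    """Flags a client whose page requests within a sliding window exceed a limit.

    The default limit and window are hypothetical values chosen for illustration.
    """

    def __init__(self, max_requests: int = 10, window_seconds: float = 5.0):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._timestamps = deque()  # request times (seconds) still inside the window

    def record(self, t: float) -> bool:
        """Record a request at time t; return True if the burst limit is exceeded."""
        # Drop timestamps that have slid out of the window.
        while self._timestamps and t - self._timestamps[0] >= self.window_seconds:
            self._timestamps.popleft()
        self._timestamps.append(t)
        return len(self._timestamps) > self.max_requests


if __name__ == "__main__":
    tabber = BurstDetector()
    # Fifteen tabs opened a tenth of a second apart.
    print([tabber.record(i * 0.1) for i in range(15)])
```

With these assumed values, the eleventh of fifteen rapid tab openings trips the limit. A reader opening one article every 30 seconds never does, however long the session lasts.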

If you are a legitimate reader who got challenged, slow down, disable extensions for the site, and try again before contacting support.

What to do if you’re blocked

There are quick fixes that resolve most challenges. If you still hit a wall, reach out to the publisher with details such as the time of the block, your IP address, and a brief description of what you were doing.

| Scenario | Likely response | Best next step |
|---|---|---|
| Normal reading on mobile or desktop | Occasional prompt to verify | Complete the check; avoid rapid-fire clicks |
| Opening 15–20 tabs at once | Temporary block or slowdown | Stagger openings; use in-site navigation |
| VPN or shared office IP | More frequent challenges | Switch to a trusted connection, or exclude the site from your VPN if it supports split tunnelling |
| Scraping or summariser extensions | Hard block or error | Disable the extension for news domains |
| Business reuse of content | Licence required | Email [email protected] to request permission |

Legal backdrop and transparency

Terms and conditions usually prohibit automated access without consent. In the UK, copyright law permits limited copying for text and data analysis when carried out for non-commercial research by someone with lawful access. That does not grant a free pass for commercial scraping. Publishers can deploy technical measures, and they can pursue claims where automated access breaches site terms or infringes database rights.

If you plan to ingest articles for a product or AI model, ask for permission first and obtain a licence that spells out scope, retention and attribution.

Clear permissions protect both sides. They reduce the risk of sudden access blocks and establish audit trails for compliance teams and model governance boards.

The numbers behind the crackdown

Industry reports suggest bots account for a large share of web traffic, with the proportion often sitting at around four in ten visits when both “good” and “bad” bots are counted. Not all automated access is malicious: search engine crawlers and monitoring tools perform useful functions. The friction comes from aggressive scrapers, inventory fraud, and model training pipelines that pull large volumes of text without consent.

- Revenue risk: misattributed traffic depresses ad rates and skews campaign performance.

- Operational strain: bursty scraping hammers servers, raising costs and slowing pages.

- Rights control: publishers seek to manage where their words end up and on what terms.

- Trust: readers expect accurate metrics and a site that loads quickly and consistently.

What this means for you and your privacy

Verification steps rely on behavioural signals and device hints that your browser already exposes. Many checks complete silently in the background. When they fail, you may see a challenge or a hold page. Choose reputable privacy tools and avoid stacking several extensions that modify pages, as they can break scripts and look suspicious to detection systems.

If you have accessibility needs, challenges can be frustrating. Use the support channels to flag the issue. State what assistive technology you use so the publisher can tune its systems and offer alternatives.

A quick self-check to reduce blocks

Try this short routine when the web keeps asking if you are real. It helps rule out the usual culprits before you escalate.

- Disable summariser and prefetch extensions on news domains.

- Switch off your VPN or pick a UK endpoint with a clean reputation.

- Sign in if the site supports accounts; logged-in sessions look steadier.

- Open fewer tabs per burst and let pages fully load.

- If blocked again, email [email protected] with the time and any reference code on the page.

Key terms, made simple

- Bot: software that performs automated actions on the web, from indexing to scraping. Some bots help, some harm.

- CAPTCHA: a short challenge that tries to tell people from code, sometimes replaced by invisible checks.

- Rate limiting: a rule that slows or stops requests when they arrive too quickly.

- Text and data mining: techniques that derive patterns from large sets of documents by copying and analysing them.
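Rate limiting is often implemented as a token bucket. Each request spends a token, and tokens refill at a steady rate up to a cap, so short bursts are tolerated but sustained hammering is not. This minimal Python sketch uses illustrative values and a hypothetical `TokenBucket` class. It is not any particular publisher's implementation.

```python
import time
from typing import Optional


class TokenBucket:
    """A minimal token-bucket rate limiter (hypothetical, for illustration).

    Tokens refill at `rate` per second up to `capacity`; each request spends one.
    When the bucket is empty the request is refused, and a server might then slow
    the client down or answer with HTTP 429 Too Many Requests.
    """

    def __init__(self, rate: float, capacity: float, now: Optional[float] = None):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so ordinary reading is never delayed
        self.last = time.monotonic() if now is None else now

    def allow(self, now: Optional[float] = None) -> bool:
        """Return True if a request arriving at `now` may proceed."""
        now = time.monotonic() if now is None else now
        # Refill in proportion to elapsed time, capped at capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(rate=1.0, capacity=5, now=0.0)
    # Seven reloads in the same instant: the first five pass, the rest are refused.
    print([bucket.allow(now=0.0) for _ in range(7)])
```

The capacity sets how big a burst is forgiven, and the rate sets how quickly a reader who pauses regains access. Waiting rather than hammering reload is therefore what clears this kind of limit.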

For researchers and start-ups, a small pilot with openly licensed text can validate an approach before you seek broader rights. For larger projects, prepare a data map, retention policy, and a plain-English description of your use case. That speeds up permissions and reduces the chance your pipeline gets blocked mid-build.
