You are not alone, and the timing rarely helps.

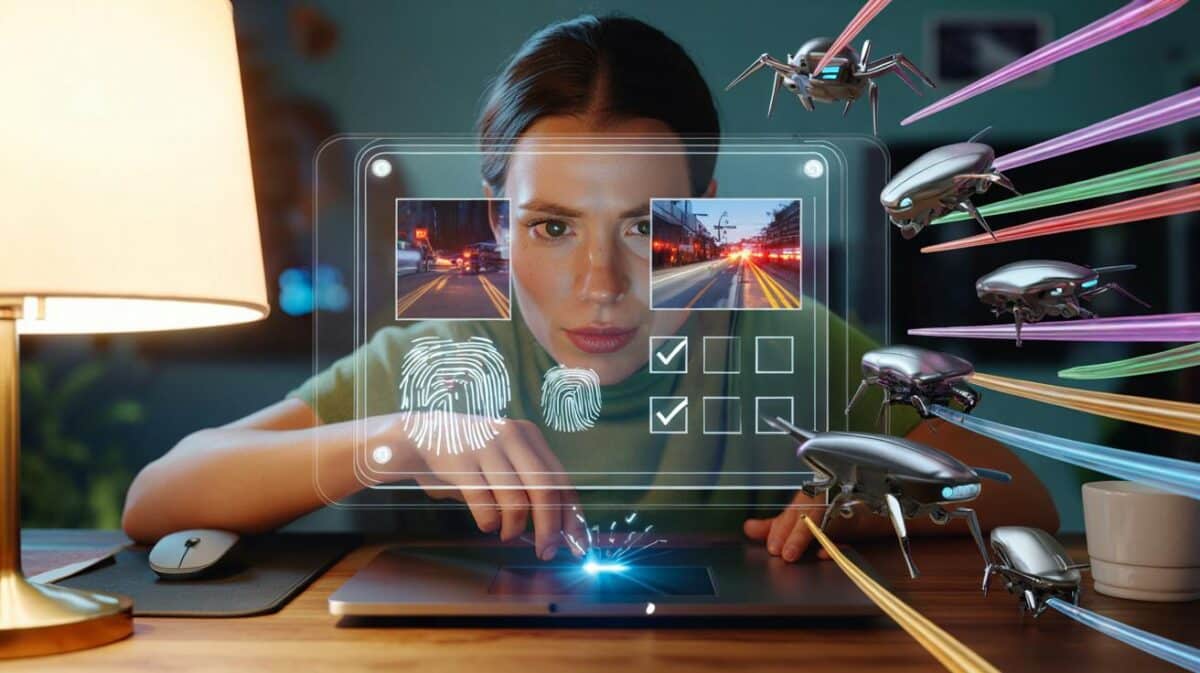

Across major news sites, readers report sudden checks asking them to prove they are human. The message looks simple. The mechanics behind it are anything but.

Why you are seeing “help us verify you as a real visitor” today

Publishers are grappling with a surge in automated traffic that scrapes articles, hoards images and hammers live pages. To cope, they deploy bot-detection systems that challenge anything that feels off. That includes your browser settings, connection type and how fast you click or scroll.

One prominent example comes from the News Broadcasting and Onic group of companies, which includes talkSPORT Limited. Its terms and conditions prohibit automated access, collection, and text or data mining of content by bots or intermediary services. The policy sits on a simple principle: people can read; software cannot, unless given permission.

Automated access and data mining are barred without permission. If behaviour looks robotic, access stops until a human proves presence.

During breaking stories or big matches, bot managers tighten thresholds. That is when a routine refresh can trigger a gate. Anecdotal figures from traffic logs at several publishers suggest that during peak surges, roughly a third of page loads will face a verification step. Most pass in seconds. Some do not.

The numbers behind the checks

Industry studies estimate that around half of web traffic is automated. A sizeable portion is classed as “bad bots” that aggressively scrape or attempt fraud. In news publishing, spikes create hot moments where the balance tilts further towards automation. Internal dashboards at large outlets show automated request rates rising by double-digit percentages during live events.

That context shapes the reader experience. If the system rates your session as risky, it raises a challenge. It can be a quick puzzle. It can be a time delay. If signals remain odd, you may see a block.

During traffic spikes, publishers report verification challenges on up to 36% of attempted reads, especially on live pages and scores.

Seven reasons your visit looks automated

- Virtual private networks or proxies: shared IPs often match known bot infrastructure.

- Disabled JavaScript or blocked cookies: bot managers need both to assess behaviour and continuity.

- Aggressive refreshing: reloading every second mimics scraping and triggers rate limits.

- Multiple tabs hitting the same page: concurrent requests from one device can resemble a crawler.

- Privacy or ad-blocking extensions that rewrite requests: some change headers and break normal patterns.

- Headless or unusual browsers: some automation frameworks leave telltale fingerprints.

- Time zone and device mismatch: a UK news app whose device and network signals point to a distant data centre looks suspicious.

Quick fixes help: enable JavaScript, allow cookies, pause your VPN and slow your refresh rate during live updates.

What this costs you and publishers

The gatekeeper adds friction. It also saves cash. Automation eats bandwidth, drives server bills and can distort ad reporting. Here is a snapshot of the trade-offs.

| Impact | For readers | For publishers |
|---|---|---|
| Time | 15–60 seconds per challenge; longer if repeated | Reduced server load during surges; lower latency for real visitors |
| Data | Extra 100–500 KB for challenge scripts and images | Savings of 10–30% bandwidth when bots are curtailed |
| Access | Occasional false positives; temporary lockouts | Fewer scraping incidents; more accurate audience metrics |
| Revenue | Interrupted sessions and abandoned reads | Cleaner ad delivery; less invalid traffic risk |

The legal backdrop in Britain

Terms and conditions are the first guardrail. Breaching them can lead to blocked access and legal claims. British law also recognises copyright in articles and database rights in compiled content. Systematic scraping can infringe those rights if carried out without permission.

There is a narrow exception that allows text and data mining for non-commercial research with lawful access. Commercial mining sits outside that scope. Many publishers actively reserve their rights and provide a channel to request a licence for crawling or API use.

Commercial text and data mining requires prior permission. Unauthorised scraping risks breach of contract, database right infringement and formal action.

How to pass the check in under two minutes

- Turn off your VPN or switch to a less congested exit node.

- Enable JavaScript and allow first-party cookies for the site.

- Close duplicate tabs that auto-refresh the same live page.

- Disable aggressive privacy extensions on news domains you trust.

- Stop rapid-fire reloading; wait 15–30 seconds between refreshes.

- Restart the browser to clear corrupted sessions and service workers.

- If blocked repeatedly, wait 10–20 minutes. Rate limits often reset.
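Why does slowing down or waiting help? A minimal sketch of one common rate-limiting technique, a sliding window, shows the idea. The class name and the limit of 10 requests per 60 seconds are assumptions chosen for illustration; publishers do not disclose their real thresholds or methods.

```python
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` per `window_seconds` for one client.

    Hypothetical illustration only; not any vendor's actual logic.
    """

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._timestamps = deque()  # times of recently allowed requests

    def allow(self, now):
        # Forget requests that have aged out of the window.
        while self._timestamps and now - self._timestamps[0] >= self.window_seconds:
            self._timestamps.popleft()
        if len(self._timestamps) < self.max_requests:
            self._timestamps.append(now)
            return True
        return False  # over the limit: this is where a challenge would appear


# Assumed limit: 10 page loads per 60 seconds.
limiter = SlidingWindowLimiter(max_requests=10, window_seconds=60)

# Reloading once a second: the first 10 loads pass, the next two do not.
rapid = [limiter.allow(float(second)) for second in range(12)]

# After a pause, the old requests fall out of the window and access returns.
after_pause = limiter.allow(75.0)
```

Under these assumed numbers, a reader who refreshes every 15–30 seconds never fills the window, which is the effect the advice above is aiming for.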

When to contact the publisher

If your device gets flagged day after day, reach out. Provide your approximate time of block, device type and browser version. Ask whether your IP range sits on a deny list used against bots. For researchers or businesses seeking bulk access, request written permission, rate limits and a clear scope. Some groups, including those behind talk-focused brands, publish a dedicated address for crawling permissions.

What publishers look for behind the scenes

Modern bot managers score behaviour across dozens of signals. They watch mouse movement, scroll rhythm and timing between clicks. They compare your device fingerprint with known automation frameworks. They examine the reputation of your network and how often requests repeat across paths.

False positives happen. A gamer’s high-refresh rig can look erratic. Corporate security tools can mask traffic in ways that resemble proxies. That is why you occasionally face a challenge despite doing nothing wrong. The aim is to confirm you are human, then lower friction on your next page view.

Suspicion comes from patterns, not from a single click. Small tweaks to your setup can tip the score back in your favour.
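To make the scoring idea concrete, here is a toy sketch. The signal names, weights and thresholds are all invented for illustration; real bot managers use many more signals and do not publish how they weigh them.

```python
from typing import Iterable

# Hypothetical weights for signals mentioned in this article.
WEIGHTS = {
    "shared_ip": 25,             # VPN or proxy exit used by many people
    "no_javascript": 20,
    "no_cookies": 15,
    "fast_refresh": 20,
    "many_tabs": 10,
    "altered_headers": 10,       # e.g. a privacy extension rewriting requests
    "headless_fingerprint": 40,
    "timezone_mismatch": 10,
}


def risk_score(signals: Iterable[str]) -> int:
    """Sum the weights of the observed signals, capped at 100."""
    return min(100, sum(WEIGHTS.get(name, 0) for name in set(signals)))


def action_for(score: int) -> str:
    """Map a score to a response using assumed thresholds."""
    if score < 30:
        return "allow"
    if score < 60:
        return "challenge"
    return "block"


with_vpn = risk_score(["shared_ip", "altered_headers"])   # 25 + 10
without_vpn = risk_score(["altered_headers"])             # 10
```

With these made-up numbers, a VPN plus a header-altering extension scores 35 and draws a challenge, while pausing the VPN drops the score to 10 and the visit is allowed. No single signal decides the outcome; the combination does.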

A quick reality check with numbers

Open five tabs on a live blog and refresh every two seconds for ten minutes. That is 1,500 requests from one device. Add a VPN exit shared by hundreds of users and a privacy extension that alters headers. The system now sees fast repetition, noisy identity and a crowded IP. A challenge is inevitable, even if your intent is innocent.
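The arithmetic behind that figure can be checked in a few lines. This sketch assumes each refresh counts as a single request and ignores the extra images and scripts a real page load pulls in; the function name is made up for the example.

```python
def total_requests(tabs, refresh_interval_s, duration_min):
    """Count page requests from `tabs` tabs, each refreshed every
    `refresh_interval_s` seconds for `duration_min` minutes."""
    refreshes_per_tab = int(duration_min * 60 // refresh_interval_s)
    return tabs * refreshes_per_tab


heavy = total_requests(tabs=5, refresh_interval_s=2, duration_min=10)
light = total_requests(tabs=1, refresh_interval_s=30, duration_min=10)
```

Five tabs at two-second intervals give 5 × 300 = 1,500 requests in ten minutes. One tab refreshed every 30 seconds gives 20 over the same period.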

Practical extras you can use today

Set a reading routine for live pages. Refresh every 30–60 seconds, or use a single tab and rely on the built-in auto-update. If a site offers a logged-in mode, sign in; stable sessions reduce false positives. On mobile, keep to the default browser where possible, as it tends to play nicely with verification scripts.

For small teams that need data, consider a licensed feed or a lightweight API. Agree a crawl window outside peak hours and a maximum request rate. Cache responses to cut duplication. Beyond legal peace of mind, you gain cleaner data and avoid tripping alarms that take days to clear.
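For teams that do have permission, the two habits above (a maximum request rate and a cache) can be sketched as follows. `PoliteFetcher` and its parameters are hypothetical names for this example, and the `fetch` function is supplied by the caller, so nothing here depends on a particular HTTP library or publisher API. The cache never expires and the crawl window is not enforced; both are simplifications.

```python
import time


class PoliteFetcher:
    """Fetch URLs no faster than one request per `min_interval_s`,
    reusing cached responses. Illustrative sketch, not a full crawler."""

    def __init__(self, fetch, min_interval_s, clock=time.monotonic, sleep=time.sleep):
        self._fetch = fetch                  # caller-supplied: url -> response body
        self._min_interval_s = min_interval_s
        self._clock = clock
        self._sleep = sleep
        self._cache = {}
        self._last_request_at = None

    def get(self, url):
        # Serve repeats from the cache so the site is not asked twice.
        if url in self._cache:
            return self._cache[url]
        # Wait until the agreed interval since the last request has passed.
        if self._last_request_at is not None:
            wait = self._min_interval_s - (self._clock() - self._last_request_at)
            if wait > 0:
                self._sleep(wait)
        self._last_request_at = self._clock()
        body = self._fetch(url)
        self._cache[url] = body
        return body
```

In use, the agreed rate goes in `min_interval_s` (for example, `PoliteFetcher(my_fetch, min_interval_s=5.0)`, where `my_fetch` is whatever licensed client the publisher approves). The injectable `clock` and `sleep` exist only so the pacing can be tested without real delays.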

Readers want fast access. Publishers want to protect their work and keep pages stable. The “verify you are real” prompt sits between those aims. With a few adjustments—both technical and behavioural—you can dodge the roadblocks and keep reading when it matters most.
